Britton Plourde Develops Tools for Quantum Computer

Quantum mechanics 'could revolutionize' areas of computation, Plourde says

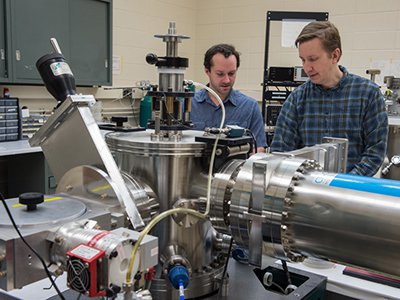

Britton Plourde, professor in the Department of Physics in the College of Arts and Sciences, has received a new grant from the National Science Foundation to work on developing tools for building a quantum computer. This is a collaborative project with a group in the Physics Department at the University of Wisconsin, Madison.

“One of the remarkable recent discoveries in information science is that quantum mechanics can lead to efficient solutions for problems that are intractable on conventional classical computers,” Plourde says. “While there has been tremendous recent progress in the realization of small-scale quantum circuits comprising several quantum bits (‘qubits’), research indicates that a fault-tolerant quantum computer that exceeds what is possible on existing classical machines will require a network of thousands or millions of qubits, far beyond current capabilities.”

A Syracuse faculty member since 2005, Plourde has been awarded multiple grants from the National Science Foundation, Army Research Office, DARPA and IARPA. He is also the recipient of an NSF CAREER Award and an IBM Faculty Award. Plourde earned a Ph.D. in physics from the University of Illinois at Urbana-Champaign, and is editor-in-chief of the journal Transactions on Applied Superconductivity, published by the Institute of Electrical and Electronics Engineers.

He answered a few questions about his research.

As I understand it, your research will try to advance the development of quantum computing—using quantum bits, or qubits, to power next-generation quantum machines. First, let’s talk about the upside. This will lead to much more powerful computers than we have now, correct?

Right now we’re at a level where it’s feasible to build small-scale quantum processors with superconducting circuits. IBM has just announced a 50-qubit processor that will be available in the coming months, and Google and Intel are planning 49-qubit systems for this year. There will be a big push to build even larger systems in the coming years. In addition, the performance of superconducting qubits has improved dramatically since the initial demonstrations in the early 2000s.

And now the difficult part. Qubits are notoriously unstable, so the problem lies in developing digital control, measurement and feedback circuitry that you can depend on.

Indeed, quantum states are unstable and difficult to preserve. Current quantum computers consist of a system of highly shielded and isolated quantum bits—inside an ultra-low-temperature refrigerator, in the case of the superconducting circuits that I work on. In the lab outside of this refrigerator we need lots of microwave electronics and digital hardware to control the quantum circuits. This makes it difficult to envision building up to significantly larger quantum systems, since the amount of room-temperature hardware for controlling the system will become overwhelming.

Also, the delays in getting signals into and out of the refrigerator can limit our ability to do feedback on the quantum circuits. The scheme that my collaborators and I are developing involves miniaturizing and moving much of the digital electronics hardware into the refrigerator near the quantum circuits. This has much more favorable properties for building up to larger systems.

What will the process be for developing the needed stability?

By moving the digital circuits that measure and control the quantum circuits inside the refrigerator, we will be able to detect certain changes in the quantum circuits with a digital readout, then apply different digital control signals, depending on the measurement.

This can happen without the signals having to pass out of the refrigerator, be processed by hardware in the lab, and then return as new control patterns sent down into the refrigerator. So the entire feedback process will be more efficient and have a much smaller footprint.
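The feedback loop described here (digital readout, then a control signal chosen by the result) can be illustrated with a standard textbook case, "active reset": read out a qubit and, only if it is found in |1>, apply a flip pulse to return it to |0>. The toy Python model below simulates that loop classically. The real-amplitude qubit model and the function names `measure`, `x_gate` and `active_reset` are hypothetical illustrations, not the scheme Plourde's group is building. In hardware, the time spent in this measure-decide-act step is what the in-refrigerator electronics are meant to shorten.

```python
import math
import random

# Toy model (assumption): a qubit a|0> + b|1> with real amplitudes, a**2 + b**2 == 1.
# Illustrates measurement-conditioned feedback only; not the project's actual hardware scheme.

def measure(state, rng):
    """Projective readout in the |0>/|1> basis: returns (outcome, collapsed state)."""
    _, b = state
    outcome = 1 if rng.random() < b * b else 0   # P(outcome 1) = b**2
    collapsed = (0.0, 1.0) if outcome == 1 else (1.0, 0.0)
    return outcome, collapsed

def x_gate(state):
    """Bit-flip (Pauli-X) control pulse: swaps the |0> and |1> amplitudes."""
    a, b = state
    return (b, a)

def active_reset(state, rng):
    """Digital readout, then a control pulse chosen by the measurement result."""
    outcome, state = measure(state, rng)
    if outcome == 1:
        state = x_gate(state)   # only applied when the qubit was read out as |1>
    return outcome, state

rng = random.Random(42)
theta = math.pi / 3
excited = (math.cos(theta / 2), math.sin(theta / 2))   # P(|1>) = 0.25
outcome, final = active_reset(excited, rng)
print(outcome, final)   # final is (1.0, 0.0) whichever outcome occurred
```

Whatever the readout, the qubit ends in |0>; the point is that the choice of control pulse depends on a measurement made moments earlier.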

Once large-scale quantum computers exist, what kinds of problems that are out of our reach now will they be useful for tackling?

There have been several initial demonstrations of quantum algorithms, including factoring 15 into 5 and 3. The factoring algorithm was one of the original ideas that kicked off research into quantum computers because there is no known efficient classical algorithm that can factor large numbers into primes. As the number gets really big, the time for the computation blows up rapidly.
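The factoring algorithm mentioned here is usually identified with Shor's algorithm. Its quantum part finds the order r of a number a modulo N (the smallest r with a^r mod N = 1); the rest is classical number theory that turns r into factors. The Python sketch below replaces the quantum step with brute-force search, which is fine for N = 15 but is exactly the part whose cost blows up for large numbers. The function names `find_order` and `factor_via_order` are hypothetical.

```python
from math import gcd

def find_order(a, n):
    """Smallest r > 0 with a**r % n == 1, by brute force (requires gcd(a, n) == 1).

    This period-finding step is what a quantum computer would speed up.
    """
    r, value = 1, a % n
    while value != 1:
        value = (value * a) % n
        r += 1
    return r

def factor_via_order(n, a):
    """Try to split n using the order of a modulo n; returns a factor pair or None."""
    g = gcd(a, n)
    if g != 1:
        return g, n // g          # a already shares a factor with n
    r = find_order(a, n)
    if r % 2 == 1:
        return None               # odd order: retry with a different a
    y = pow(a, r // 2, n)         # a**(r/2) mod n
    if y == n - 1:
        return None               # trivial square root of 1: retry with a different a
    p = gcd(y - 1, n)
    return p, n // p

print(factor_via_order(15, 7))    # (3, 5): the order of 7 mod 15 is 4, and 7**2 mod 15 = 4
```

Some choices of a fail (for example a = 14 with N = 15), which is why the algorithm picks a at random and retries.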

If a number contained 2,000 bits—600 digits—the time needed to factor it would be longer than the age of the Universe. A quantum computer could factor such a large number in roughly a day, but the computer would need well over a million qubits, amongst other things, so it will take quite a while yet.
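The "2,000 bits, 600 digits" figure is a rounded conversion: each bit is worth log10(2), about 0.301 decimal digits. The short Python check below, with hypothetical helper names, computes both the exact digit counts for a 2,000-bit integer and the quick estimate.

```python
import math

def decimal_digit_range(bits):
    """Smallest and largest decimal digit counts for an integer with exactly `bits` bits.

    Such integers run from 2**(bits - 1) up to 2**bits - 1.
    """
    smallest = 2 ** (bits - 1)
    largest = 2 ** bits - 1
    return len(str(smallest)), len(str(largest))

def approx_digits(bits):
    """Rule of thumb: each bit is worth log10(2), about 0.301 decimal digits."""
    return bits * math.log10(2)

print(decimal_digit_range(2000))      # (602, 603)
print(round(approx_digits(2000), 1))  # 602.1
```

So a 2,000-bit number has 602 or 603 decimal digits, consistent with the rounded "600 digits" above.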

There is currently a lot of interest in quantum simulation: using a quantum computer to simulate another quantum system, such as complex molecules, which are difficult to simulate numerically on a classical computer. This wouldn’t necessarily need a million qubits, but could be done with, say, hundreds or thousands. Simulation will probably be the first application in which quantum processors outperform a classical approach. This could eventually lead to breakthroughs in medicine, through the simulation of new drug molecules, as well as in materials science.
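One common way to see why such systems are hard to simulate classically: a brute-force state-vector simulation stores 2^n complex amplitudes for an n-qubit state, so memory doubles with every added qubit. The helper below estimates that memory, assuming 16 bytes per amplitude (double-precision complex numbers); the function name `statevector_bytes` is hypothetical, and specialized classical methods can do better than brute force for some systems.

```python
def statevector_bytes(num_qubits, bytes_per_amplitude=16):
    """Memory for a brute-force classical copy of an n-qubit quantum state.

    The state has 2**num_qubits complex amplitudes; the default of 16 bytes
    assumes each amplitude is a double-precision complex number.
    """
    return (2 ** num_qubits) * bytes_per_amplitude

GIB = 2 ** 30
for n in (10, 30, 50):
    print(f"{n} qubits: {statevector_bytes(n) / GIB:g} GiB")
```

Under these assumptions, 30 qubits already need 16 GiB, and 50 qubits need 16,777,216 GiB (16 PiB), which is why hundreds or thousands of qubits are far out of reach for this approach.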

Conspiracy theorists think this is just another step in the march toward total control of our lives. What’s your opinion on that?

I have never come across such a conspiracy theory, but it’s important to realize that quantum computers will not be able to solve all problems faster than a classical computer—that’s a common misconception. There are many problems where a quantum computer would be slower, or it simply wouldn’t make sense to use. For example, you wouldn’t run a spreadsheet or write your term paper for history class on a quantum computer. So no, quantum computers won’t be making classical computers obsolete at any point, but they could revolutionize certain areas of computation.